Google recently released the AJAX Libraries API, which allows you to load popular JavaScript libraries from Google's servers. The benefits are outlined in the API's description:

The AJAX Libraries API is a content distribution network and loading architecture for the most popular open source JavaScript libraries. By using the google.load() method, your application has high speed, globally available access to a growing list of the most popular JavaScript open source libraries.

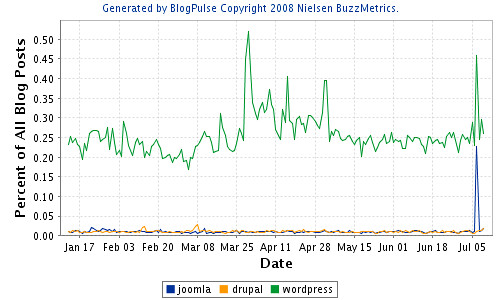

I was thinking of using it for a current project that relies heavily on JavaScript. However, since the project is built on a CMS (Joomla), my main concern was how many times MooTools would be loaded. Joomla uses a PHP-based plugin system (plugins register as observers of events triggered during Joomla code execution), and JavaScript loaded by multiple plugins can be redundant: there is no central way of knowing which JavaScript library has already been loaded, nor is there a central repository for JavaScript libraries within Joomla.

MooTools is the preferred library for Joomla, and in some cases it is loaded two or even three times. I did not want our extension to add to that mess. To solve the problem I would test for the existence of MooTools with if (typeof(MooTools) == 'undefined') and load it from Google only if it wasn't available. This would have worked, but I would have had to add the JavaScript for the AJAX Libraries API just to load one script, MooTools, when I also had three or four custom libraries of my own that I wanted loaded.
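The existence check described above can be sketched as a small helper. This is only an illustration: `loadIfMissing`, its parameters, and the `loader` callback are hypothetical names, not part of Joomla, MooTools, or Google's API.

```javascript
// Hypothetical helper: call loader(src) only when scope[globalName] is undefined.
// `scope` would normally be `window`; `loader` is any function that injects a script.
function loadIfMissing(globalName, src, scope, loader) {
  if (typeof scope[globalName] !== 'undefined') {
    return false; // library already present, nothing to load
  }
  loader(src);
  return true; // a load was requested
}

// Possible usage in a page (the path is a placeholder):
// loadIfMissing('MooTools', '/js/mootools.js', window, someScriptLoader);
```

Passing the scope and the loader in as arguments keeps the check independent of how the script actually gets injected.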

Then I thought: why not develop a JavaScript loader just like the Google AJAX Libraries API loader? It should be just a simple function that appends a script element to the document head. So I started with:

    function loadJS(src) {
        var script = document.createElement('script');
        script.src = src;
        script.type = 'text/javascript';
        // Poll every 50 ms until the document head exists, then append the script.
        var timer = setInterval(closure(this, function(script) {
            if (document.getElementsByTagName('head')[0]) {
                clearInterval(timer);
                document.getElementsByTagName('head')[0].appendChild(script);
            }
        }, [script]), 50);
    }

    // Returns a function that calls fn with obj as `this` and params as its arguments.
    function closure(obj, fn, params) {
        return function() {
            fn.apply(obj, params);
        };
    }

The loadJS function would try to attach a script element to the document head every 50 milliseconds until it succeeded.

This works, but there is no way of knowing when the JavaScript file has fully loaded. Normally, the way to find out whether a JS file has finished loading from the remote server is to have the file itself invoke a callback function in the client-side JavaScript (aka JavaScript remoting). This, however, means you have to build a callback call into each JavaScript file, which is not what I wanted.
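For illustration, the callback approach might look roughly like the sketch below. The registry and the function names `onLibraryLoaded` and `libraryLoaded` are made up for this example; the point is only that every remote file would need to end with a call back into the page.

```javascript
// Hypothetical registry of callbacks waiting on a library, keyed by library name.
var loadCallbacks = {};

// The page registers interest in a library before requesting it.
function onLibraryLoaded(name, callback) {
  (loadCallbacks[name] = loadCallbacks[name] || []).push(callback);
}

// Each remote file would have to end with a call such as libraryLoaded('mylib').
function libraryLoaded(name) {
  var callbacks = loadCallbacks[name] || [];
  delete loadCallbacks[name]; // make sure each callback fires only once
  for (var i = 0; i < callbacks.length; i++) {
    callbacks[i]();
  }
}
```

This is fine for files you control, but it cannot be applied to third-party libraries without editing them.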

So to fix this problem I thought I'd add another interval with setInterval() to detect when the remote JS file had finished loading, by polling a test condition that becomes true once the file has loaded. For example, for MooTools it would mean that the object window.MooTools exists.
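A minimal sketch of that polling idea follows. The name `whenLoaded` and the optional `timers` argument are assumptions made for this example (the latter only so the function can be driven by fake timers); it is not the library I actually wrote.

```javascript
// Hypothetical helper: polls isLoaded() until it returns true, then calls onReady once.
// `timers` is optional and defaults to wrappers around the standard timer functions.
function whenLoaded(isLoaded, onReady, intervalMs, timers) {
  timers = timers || {
    setInterval: function (fn, ms) { return setInterval(fn, ms); },
    clearInterval: function (id) { clearInterval(id); }
  };
  var id = timers.setInterval(function () {
    if (isLoaded()) {
      timers.clearInterval(id); // stop polling before notifying
      onReady();
    }
  }, intervalMs || 50);
  return id;
}

// Possible usage for MooTools (init is a placeholder for your own startup code):
// whenLoaded(function () { return typeof window.MooTools !== 'undefined'; }, init);
```

Each library supplies its own test function, which is the "load condition test" idea in its simplest form.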

So I went about writing a JavaScript library for this, with a somewhat elaborate API in which JS libraries registered their "load condition test" and could then be loaded remotely. About one wasted hour later (well, not wasted if you learn something), I realized that this wouldn't work for my purpose either, because it broke the window.onload functionality. Some remote files (cached ones) would load before the window.onload event and others after, which made existing JavaScript that relied on window.onload fail.

Last resort: how did Google do it? I had noted earlier that if you load a JavaScript file with Google's API, the file always loads before the window.onload event fires. Here is the simple test (in the debug output, the Google callback always fired first):

    google.load("prototype", "1");
    google.load("jquery", "1");
    google.load("mootools", "1");

    google.setOnLoadCallback(function() {
        addLoad(function() {
            debug('google.setOnLoadCallback - window.onload');
        });
        debug('google.setOnLoadCallback');
    });

    addLoad(function() {
        debug('window.onload');
    });

    debug('end scripts');

I had to take a look at the source code of Google's AJAX Libraries API, which is at http://www.google.com/jsapi, to see how they achieved this.

It never occurred to me that you could force the browser to load your JavaScript before the window.onload event so I was a bit baffled. Browsing through their source code I came upon what I was looking for:

    function q(a,b,c){if(c){var d;if(a=="script"){d=document.createElement("script");d.type="text/javascript";d.src=b}else if(a=="css"){d=document.createElement("link");d.type="text/css";d.href=b;d.rel="stylesheet"}var e=document.getElementsByTagName("head")[0];if(!e){e=document.body.parentNode.appendChild(document.createElement("head"))}e.appendChild(d)}else{if(a=="script"){document.write('<script src="'+b+'" type="text/javascript"><\/script>')}else if(a=="css"){document.write('<link href="'+b+'" type="text/css" rel="stylesheet"></link>')}}}

The code has been minified, so it's a bit hard to read. Basically it's the same as any JavaScript remoting code you'd find on the net, but the part that jumps out is:

    var e=document.getElementsByTagName("head")[0];
    if(!e){e=document.body.parentNode.appendChild(document.createElement("head"))}
    e.appendChild(d)

Notice how it will create a head node and append it to the parentNode of the document body if the document head does not exist yet.
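Since the minified source is hard to follow, here is my own expanded reading of the same function. The names `injectResource`, `kind`, `url`, `useDom`, `node` and `head` are guesses, and the extra `doc` parameter is my addition so the function can be tried against a stand-in document; Google's original uses single-letter names and the global document.

```javascript
// Readable rewrite of the minified q(a, b, c); all names are guesses, not Google's.
// `doc` is an added parameter (defaults to the global document) for testing.
function injectResource(kind, url, useDom, doc) {
  doc = doc || document;
  if (useDom) {
    var node;
    if (kind == 'script') {
      node = doc.createElement('script');
      node.type = 'text/javascript';
      node.src = url;
    } else if (kind == 'css') {
      node = doc.createElement('link');
      node.type = 'text/css';
      node.href = url;
      node.rel = 'stylesheet';
    }
    var head = doc.getElementsByTagName('head')[0];
    if (!head) {
      // No head yet: create one under the body's parent and use it.
      head = doc.body.parentNode.appendChild(doc.createElement('head'));
    }
    head.appendChild(node);
  } else {
    // Fallback branch: write the tag straight into the document stream.
    if (kind == 'script') {
      doc.write('<script src="' + url + '" type="text/javascript"><\/script>');
    } else if (kind == 'css') {
      doc.write('<link href="' + url + '" type="text/css" rel="stylesheet"></link>');
    }
  }
}
```

Calling it with a true third argument takes the DOM branch; a false one falls back to document.write, as in the original.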

Appending the script element immediately like this forces the browser to load the JavaScript right then, no matter what. Following that method, you can load remote JavaScript files dynamically and just use the regular old window.onload event or "domready" event, and the files will be available.

Apparently this won't work on all browsers, since Google's code also has the alternative:

document.write('<script src="'+b+'" type="text/javascript"><\/script>')

With a bit of testing, you could discern which browsers work with which method and use that. I'd imagine that the latest browsers would accept the DOM method and older ones would need document.write.

So my JavaScript file loading function became:

    function loadJS(src) {
        var script = document.createElement('script');
        script.src = src;
        script.type = 'text/javascript';
        var head = document.getElementsByTagName('head')[0];
        if (!head) {
            head = document.body.parentNode.appendChild(document.createElement('head'));
        }
        head.appendChild(script);
    }

Anyways, I finally got my JavaScript library loader working just as I liked, thanks to the good work done by Google with the AJAX Libraries API.